Artificial intelligence-based technology for enhancing the quality of simulation, navigation, and outcome prediction for hepatectomy

Abstract

In the past decade, artificial intelligence (AI)-based technology has been applied to develop simulation and navigation systems and models for predicting surgical outcomes in hepatobiliary surgery. AI technology has been applied in three-dimensional (3D) simulation software to identify the intrahepatic vascular structure and to perform accurate liver segmentation and volumetry. Recently, 3D and 4D printing have been used as innovative technologies for tissue and organ fabrication, medical education, and preoperative planning, and AI can empower these printing technologies. Attempts have been made to use AI technology in augmented reality for navigation and in intraoperative ultrasound. A deep learning model can be useful for predicting surgical outcomes and postoperative early recurrence in patients with hepatocellular carcinoma. Indocyanine green fluorescence imaging is used in hepatobiliary surgery to visualize the anatomy of the bile duct, hepatic tumors, and hepatic segmental areas, and AI technology has been applied to fuse intraoperative near-infrared fluorescence and visible images. Preoperative simulation, intraoperative navigation, and models to predict surgical outcomes using AI technology can be clinically applied in hepatobiliary surgery. Through reliable and robust clinical studies, AI can be a useful tool in clinical practice to improve the safety and efficacy of hepatobiliary surgery.

INTRODUCTION

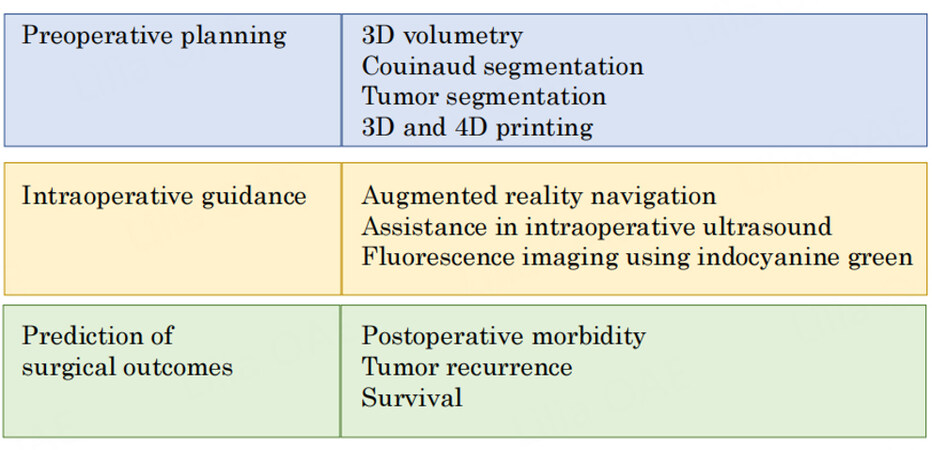

Artificial intelligence (AI)-based technologies in medicine are advancing rapidly. The concept of AI was introduced as a computer program to simulate human cognitive functions. Machine learning is at the core of AI, and deep learning is an important branch of machine learning[Figure 1]. In hepatobiliary surgery, AI technology using a large number of medical images has recently been applied to develop simulation and navigation systems and models for predicting surgical outcomes[1][Figure 2]. Three-dimensional (3D) reconstruction based on computed tomography (CT) images is used to calculate future liver remnant volume[2]. AI technology can contribute to the development of 3D reconstruction systems[3,4] and perform liver segmentation, Couinaud segmentation, tumor segmentation, and volumetry[5-8]. AI technology has also been used for augmented reality (AR) navigation systems[9-11]. Three-dimensional printing is an innovative technology for tissue and organ fabrication, medical education, and preoperative planning. Recently, 4D printing has emerged, with the fourth dimension being the time-dependent change in shape after printing. AI-based technology can enhance the accuracy and robustness of 3D- and 4D-printed models. For liver surgery navigation, AR has been applied to provide a semitransparent overlay of the preoperative images of the area of interest, such as liver tumors and vessels[12,13]. Moreover, researchers have attempted to use deep learning to obtain real-time semantic segmentation and improve 3D augmentation[9]. In intraoperative ultrasonography, AI technology can help identify focal liver lesions[14]. Several deep learning models have been reported to be useful for predicting postoperative complications and survival outcomes using preoperative medical images[15-17].
The microvascular invasion of hepatocellular carcinoma (HCC) is an indicator of an aggressive tumor, tumor recurrence, and poor survival after surgery. Deep learning-based AI using preoperative CT can predict microvascular invasion and survival outcomes[18,19].

Figure 2. An overview of artificial intelligence techniques used in preoperative planning, intraoperative guidance, and prediction of surgical outcomes.

Intraoperative fluorescence imaging with indocyanine green (ICG) is used to visualize cancerous tissues and anatomic structures[20]. Recently, it was discovered that using signal acquisition and processing technology, the near-infrared fluorescence signal emitted from ICG can be fused with visible light color images. Convolutional neural network (CNN)-based deep learning models have been broadly applied in image processing and computer vision[21].
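As a rough illustration of what fusing a near-infrared fluorescence signal with a visible-light color image means at the pixel level, the sketch below alpha-blends a green pseudo-color onto an RGB pixel in proportion to the normalized fluorescence intensity. This is a plain linear overlay chosen for illustration only, not the adaptive or CNN-based fusion methods cited above; the function names, the green pseudo-color, and the `max_alpha` parameter are all assumptions.

```python
def fuse_pixel(rgb, nir, max_alpha=0.8, color=(0, 255, 0)):
    """Blend a pseudo-color onto one RGB pixel, weighted by NIR intensity.

    rgb: (r, g, b) tuple of ints in 0..255 from the visible-light image.
    nir: fluorescence intensity, expected to be normalized to 0.0..1.0.
    max_alpha and color are illustrative defaults (assumptions).
    """
    # Clamp the fluorescence value, then scale it to an overlay opacity.
    alpha = max_alpha * min(max(nir, 0.0), 1.0)
    return tuple(round((1 - alpha) * v + alpha * c) for v, c in zip(rgb, color))


def fuse_image(rgb_image, nir_image, **kwargs):
    """Apply fuse_pixel to every pixel of two equally sized 2D images."""
    return [
        [fuse_pixel(p, f, **kwargs) for p, f in zip(rgb_row, nir_row)]
        for rgb_row, nir_row in zip(rgb_image, nir_image)
    ]
```

Pixels with no fluorescence keep their original color, while strongly fluorescent pixels shift toward the pseudo-color without fully hiding the underlying anatomy.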

In this article, we discuss the application of AI-based technology to the development of simulation and navigation systems and of models for predicting surgical outcomes, based on preoperative imaging and ICG fluorescence imaging, in hepatobiliary surgery.

APPLICATION OF AI TECHNOLOGY FOR PREOPERATIVE SIMULATION

AI technology for 3D simulation

The intrahepatic vascular structure must be identified, and accurate liver segmentation and volumetry performed, to ensure precise and safe liver surgery[Table 1]. Three-dimensional simulation software has been applied to reconstruct intrahepatic structures and calculate future remnant liver volume[22]. Previous studies using deep learning-based algorithms for the automatic extraction of portal veins and hepatic veins found that the deep learning model contributed to reducing the processing time[3,4]. Chen et al. reported that with the use of the residual-dense-attention U-net model, a CNN, accurate segmentation of the liver and liver tumor on CT images could be obtained[5]. Koitka et al. demonstrated that a CNN provided fully automated 3D volumetry of the right and left liver on CT images[6]. Mojtahed et al. proposed a novel medical software (Hepatica) for performing automatic liver volumetry, followed by semiautomatic delineation of the Couinaud segments[7]. In this software, a CNN automatically delineates the liver from a 3D T1-weighted magnetic resonance image and segments the volume corresponding to the liver. The new software could accurately delineate the liver and divide the volume into Couinaud segments. Lyu et al. used a novel approach to train convolutional networks for liver tumor segmentation using Couinaud segment annotation, which fits radiologists’ work practice better and significantly reduces manual effort[8]. The new method can use these annotations to estimate pseudo tumor masks as pixel-wise supervision for training a fully supervised tumor segmentation model. AI technology has contributed to the extraction of detailed and precise data on intrahepatic vessels and liver segmental volumes.
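Once a segmentation mask is available, volumetry reduces to counting voxels and multiplying by the voxel size. The following minimal sketch shows that arithmetic and the resulting future liver remnant fraction. The label values, the nested-list mask layout, and the function names are assumptions made for illustration. As a simplification, total liver volume is taken here as remnant plus resected volume, with no separate handling of tumor volume.

```python
def volume_ml(mask, label, spacing_mm):
    """Volume of all voxels equal to `label`, in millilitres.

    mask: 3D nested list indexed as [slice][row][col] of integer labels.
    spacing_mm: (dz, dy, dx) voxel size in millimetres.
    """
    dz, dy, dx = spacing_mm
    voxel_mm3 = dz * dy * dx
    count = sum(v == label for sl in mask for row in sl for v in row)
    return count * voxel_mm3 / 1000.0  # 1 mL = 1000 mm^3


def remnant_fraction(mask, remnant_label, resected_label, spacing_mm):
    """Future liver remnant as a fraction of the total segmented liver volume."""
    remnant = volume_ml(mask, remnant_label, spacing_mm)
    resected = volume_ml(mask, resected_label, spacing_mm)
    total = remnant + resected
    if total == 0:
        raise ValueError("mask contains no liver voxels")
    return remnant / total
```

For example, a mask with equal numbers of remnant and resected voxels gives a remnant fraction of 0.5, regardless of voxel spacing.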

Table 1. Selected studies utilizing AI for preoperative 3D simulation in liver surgery

| Reference | AI-based algorithm | Aim | Imaging modality | Performance |
| --- | --- | --- | --- | --- |
| Kazami et al.[3] | Deep learning-based algorithm | Extraction of the PV and HV | CT | Dice coefficient for the PV and HV: 0.90 and 0.94, respectively |
| Takamoto et al.[4] | AI-assisted reconstruction | Extraction of the IVC, PV, and HV systems | CT | Shorter processing time compared with the manual method (2.1 min vs. 35.0 min, P < 0.001) |
| Chen et al.[5] | Residual-Dense-Attention U-Net | Segmentation between liver organs and lesions | CT | Overall computational time reduced by about 28% compared with other convolutions; the accuracy of liver and lesion segmentation: 96% and 94.8% with IoU values and 89.5% and 87% compared with AVGDIST values |
| Koitka et al.[6] | Multi-Resolution U-Net 3D neural networks | Obtain 3D liver volumetry | CT | Sørensen–Dice coefficient: 0.9726 ± 0.0058 |
| Mojtahed et al.[7] | Hepatica: a deep-learning-based liver volume measurement tool | Measurement of segmental liver volume | MRI | Mean Dice score: 0.947 ± 0.010 |
| Lyu et al.[8] | CouinaudNet: a system that trains convolutional networks for liver tumor segmentation | Segmentation of liver tumors using Couinaud annotation | CT | Dice per case and overall for tumor segmentation: 62.2% and 74.0%, respectively, on the MSD08 test set and 68.4% and 80.9% on the LiTS test set |
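Most of the performance values in these studies are overlap metrics between a predicted and a reference segmentation. As a minimal sketch, assuming both masks are flattened to equal-length sequences of 0/1 labels (the function name and the handling of two empty masks are illustrative choices), the Dice coefficient and intersection over union (IoU) can be computed as follows:

```python
def dice_and_iou(pred, truth):
    """Return (Dice, IoU) for two equally sized flat sequences of 0/1 labels."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    inter = sum(1 for p, t in zip(pred, truth) if p and t)
    n_pred = sum(1 for p in pred if p)
    n_truth = sum(1 for t in truth if t)
    union = n_pred + n_truth - inter
    if union == 0:
        # Both masks are empty: treated here as perfect agreement (assumption).
        return 1.0, 1.0
    dice = 2 * inter / (n_pred + n_truth)
    iou = inter / union
    return dice, iou
```

The two metrics are monotonically related (Dice = 2·IoU / (1 + IoU)), so Dice is never lower than IoU for the same pair of masks. This is worth keeping in mind when comparing values reported with different metrics.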

AI technology for 3D and 4D printing

Three-dimensional printing is an innovative technology for tissue and organ fabrication, medical education, and preoperative planning. The use of 3D-printed liver models allows surgeons to obtain accurate information regarding vessel anatomy, the relationship between the tumor and vessels, and the parenchymal transection plane. Surgeons can freely handle a model of the patient’s liver before surgery. In addition, 3D-printed liver models can be used to train new surgeons[23,24]. Various materials such as polymers and hydrogels are used to fabricate the 3D-printed structure, and a complex creation process, such as the extrusion of feedstock material and building components layer by layer with dimensional accuracy, is needed. Meiabadi et al. reported that an artificial neural network-based method can enhance the accuracy of modeling for toughness, part thickness, and production cost-dependent variables[25]. Rojek et al. showed the utility of AI-based design for 3D printing in saving materials and reducing waste[26]. Recently, 4D printing has emerged, in which the fourth dimension of time is added to 3D printing: the shape, properties, and functionality of the printed material change over time in response to stimuli. An AI algorithm can be used to determine the best design of the toolpath and the stimuli-responsive material distribution, allowing precise shape control of the 4D-printed structure. AI technology can also improve the design of 4D printing by using a library of previous scans of the target region of interest and coupling it with incomplete anatomy scan data to reconstruct a patient-specific 4D-printing model. AI-based 4D printing can improve the form and function of shape-changing and shape-memory materials[27].

APPLICATION OF AI TECHNOLOGY FOR INTRAOPERATIVE NAVIGATION

AI technology for AR and intraoperative ultrasound

Intraoperative navigation techniques, which began with intraoperative ultrasound, may help surgeons perform liver surgery. Recently, AR has been applied to assist the operator in minimally invasive surgery. Liver tumors and vascular and biliary structures reconstructed using preoperative CT images are projected on the liver surface during liver parenchymal transection[28]. Adballah et al. used AR software during the laparoscopic resection of liver tumors[29]. Pseudotumors were created in cadaveric sheep livers, and a virtual preoperative 3D model was reconstructed using CT imaging. When the tumor image and 1-cm peritumoral margins were projected onto the liver surface during AR laparoscopic liver resection, the resection margins were more accurate and had less variability than those obtained using standard ultrasonographic navigation. A CNN has been used to obtain real-time semantic segmentation of the scene and improve the precision of the subsequent 3D enhancement for an in-vivo robot-assisted radical prostatectomy[9]. Lin et al. proposed using a dual-modality endoscopic probe for tissue surface shape reconstruction and hyperspectral imaging enabled by a CNN model[10]. Structured light images were used to recover the depth maps of tissue surfaces using a fully convolutional network. The spectrographic and RGB images were jointly processed by a CNN-based super-resolution model to generate pixel-level dense hypercubes. By combining the depth maps and hypercubes using AR, surgeons can visualize the recovered 3D surfaces, narrow-band images, and oxygen saturation maps. Luo et al. evaluated the utility and accuracy of the proposed AR navigation system for performing liver resection by a stereoscopic laparoscope using five modules: hand-eye calibration, preoperative image segmentation, intraoperative liver surface reconstruction, image-to-patient registration, and AR navigation[11].
An automatic CNN-based algorithm was used to segment the liver model using preoperative CT images. An unsupervised CNN framework was introduced to estimate the depth while reconstructing the intraoperative 3D model for registration. AI systems have also been applied in intraoperative ultrasound. Barash et al. developed an AI system to detect liver lesions in intraoperative ultrasound. The area under the curve (AUC) of the algorithm performance was 80.2%, and the overall classification accuracy was 74.6%. The algorithm was found to assist in identifying focal liver lesions in intraoperative ultrasound performed by the liver surgeon[14].

AI technology for fluorescence imaging using ICG

ICG is mainly used as a fluorogenic reagent for fluorescence imaging-guided surgery. Protein-bound ICG emits fluorescence that peaks at approximately 840 nm when illuminated with near-infrared light

The CNN architecture has also been applied to fluorescence lifetime imaging microscopy (FLIM). FLIM is an imaging technique that uses the inherent properties of fluorescent dyes. It identifies differences in the intensity patterns and lifetime of autofluorescence between cancerous tissues, margins, and normal tissues[38]. CNNs can reduce the acquisition time required to reconstruct raw single-pixel fluorescence data into intensity and lifetime images[39]. Marsden et al. reported that a CNN allows for accurate and rapid aiming beam segmentation, and thus localization and visualization, during FLIM acquisition[40].

APPLICATION OF AI TECHNOLOGY TO PREDICT SURGICAL OUTCOMES

AI is also being used to predict postoperative morbidity and recurrence after liver surgery[Table 2]. When used as a mathematical tool, an artificial neural network model can predict postoperative liver failure and early recurrence after hepatic resection of HCC[15,16]. In previous reports, AI-based models using the machine learning technique were able to predict postoperative morbidity after liver, pancreatic, and colorectal surgery with a C-statistic value of 0.74[17]. Li et al. developed a deep CNN nomogram that predicted microvascular invasion in HCC and survival outcomes including recurrence-free survival and overall survival based on contrast-enhanced CT image and clinical variables[19]. The AUC value was 0.897 in the validation cohort. Wakiya et al. reported the use of a deep learning model to predict early postoperative recurrence after resection of intrahepatic cholangiocarcinoma using plain CT imaging from 41 patients. The average sensitivity, specificity, and accuracy were 97.8%, 94.0%, and 96.5%, respectively[41].
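The sensitivity, specificity, and accuracy values quoted for such classifiers all derive from the same confusion matrix. The sketch below shows the arithmetic on binary labels (1 = event such as early recurrence, 0 = no event). The function name and the example data are hypothetical and are not taken from any of the cited studies.

```python
def classification_metrics(y_true, y_pred):
    """Return (sensitivity, specificity, accuracy) for binary 0/1 labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative case")
    sensitivity = tp / (tp + fn)  # fraction of true events that were detected
    specificity = tn / (tn + fp)  # fraction of non-events correctly ruled out
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return sensitivity, specificity, accuracy


# Hypothetical example: 4 patients with the event, 4 without.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
metrics = classification_metrics(y_true, y_pred)
```

Because these values depend on the chosen decision threshold and on the event rate in the cohort, they are best read alongside a threshold-independent measure such as the AUC.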

AI technology to predict surgical outcomes in patients with hepatocellular carcinoma

| Reference | AI-based algorithm | Predicted object | Incorporated variables | Performance |

| Mai et al.[15] | ANN model | Post-hepatectomy early recurrence (within two years) | γ-GTP, AFP, tumor size, tumor differentiation, MVI, satellite nodules, and blood loss | AUC: 0.753 in the derivation cohort and 0.736 in the validation cohort |

| Mai et al.[16] | ANN model | Postoperative severe liver failure# | Plt, PT, T-Bil, AST, standardized future liver remnant | AUC: 0.880 in the development set and 0.876 in the validation set |

| Li et al.[19] | DCNN | Microvascular invasion, DFS, and OS | Clinicoradiologic features | AUC of DCNN nomogram: 0.929 in the training cohort and 0.865 in the validation cohort; the DFS and OS differed significantly between the DCNN-nomogram-predicted groups with and without MVI |

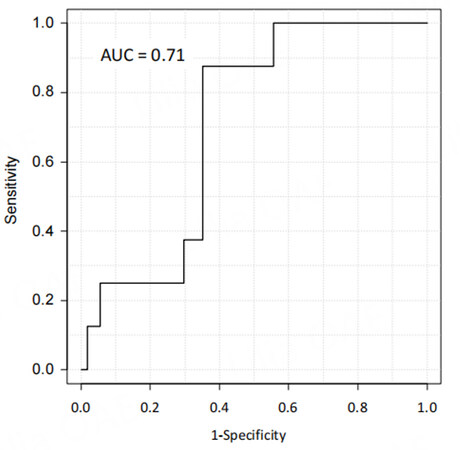

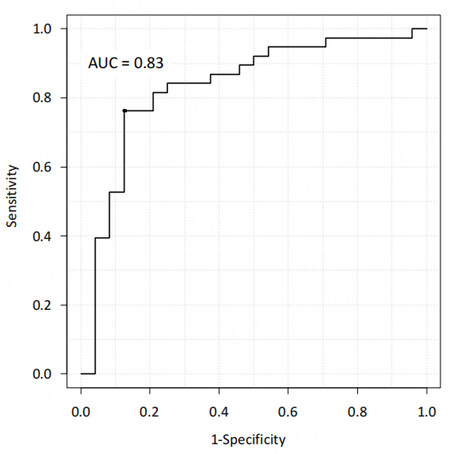

| Our data (unpublished) | AI model implemented using CNNs and multilayer perception as a classifier | Postoperative complications of Clavien-Dindo classification II or higher and intraoperative blood loss | Arterial preoperative CECT imaging phase, sex, age, body mass index, preoperative ASA physical status classification, diabetes mellitus, serum ALT, Child-Pugh classification, Plt, and laparoscopic approach | AUC: 0.71 for postoperative complications and 0.83 for major blood loss |

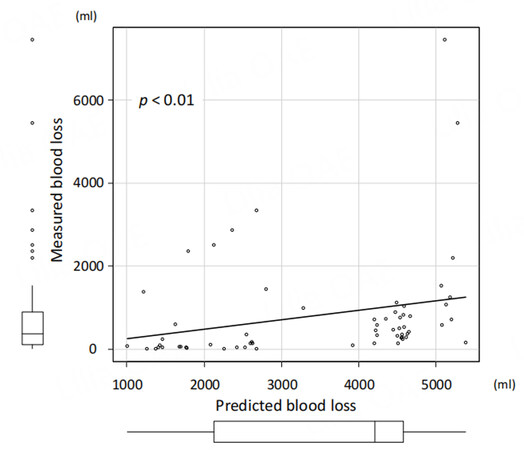

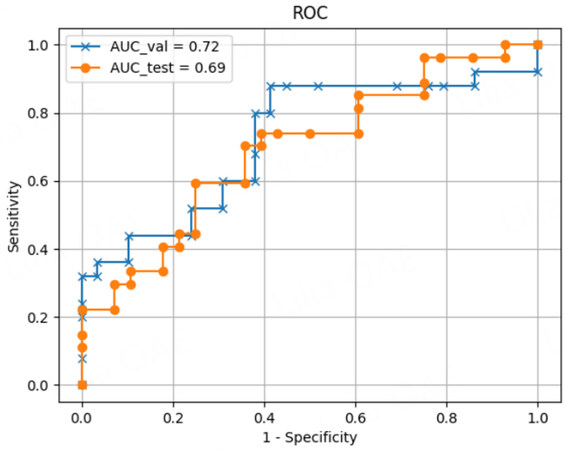

We have recently developed deep learning models based on contrast-enhanced CT imaging to predict surgical outcomes and postoperative early recurrence in patients undergoing hepatic resection for HCC. The data of 543 patients who underwent initial hepatectomy for HCC were randomly divided into training, validation, and test datasets in a ratio of 8:1:1. Preoperative arterial-phase contrast-enhanced CT images and several clinical variables, including sex, age, body mass index, preoperative American Society of Anesthesiologists physical status classification, the presence of diabetes mellitus, serum alanine aminotransferase level, Child-Pugh classification status, platelet count, and laparoscopic approach, were used to create the model for predicting surgical risk. The surgical risk was assessed using intraoperative blood loss and postoperative complications of Clavien-Dindo classification II or higher. The deep learning model predicting both major blood loss and postoperative complications was developed using a dense convolutional network with explanatory variables including clinical data and contrast-enhanced CT imaging. To evaluate the predictive performance of the models, we used receiver operating characteristic (ROC) curves and their AUC values. The AUCs of the predictive model for postoperative complications and major blood loss were 0.71 and 0.83, respectively [Figures 3 and 4]. Using the deep learning model, the predicted blood loss was significantly correlated with measured blood loss during hepatic resection [P < 0.01; Figure 5].
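For readers less familiar with the AUC, it can be read as the probability that a randomly chosen patient with the event receives a higher predicted score than a randomly chosen patient without it. The sketch below computes it directly from that definition by pairwise comparison, counting ties as one half. It is an illustrative implementation with a hypothetical function name, not the evaluation code used for the models described above.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of scores.

    labels: sequence of 0/1 outcomes (1 = event occurred).
    scores: predicted risk for each case; higher means more likely.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive case ranked above negative case
            elif p == n:
                wins += 0.5   # tie counts as half a win
    return wins / (len(pos) * len(neg))
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation. The pairwise loop is quadratic in cohort size, which is acceptable for a few hundred patients; a rank-based formulation would be preferable for much larger datasets.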

Figure 3. Receiver operating characteristic curve of the deep learning model to predict postoperative complications after hepatic resection of hepatocellular carcinoma with the area under the curve value.

Figure 4. Receiver operating characteristic curve of the deep learning model to predict major blood loss after hepatic resection of hepatocellular carcinoma with the area under the curve value.

Figure 5. Correlation between predicted blood loss and measured blood loss during hepatic resection of hepatocellular carcinoma.
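The agreement between predicted and measured blood loss can be summarized with a correlation coefficient. The text does not state which coefficient was used, so the sketch below assumes Pearson's r purely for illustration; the function name is hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / math.sqrt(sxx * syy)
```

A high correlation shows that predictions track measurements in rank and trend, but it does not by itself show that the predicted volumes are unbiased; a constant offset between predicted and measured values would leave r unchanged.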

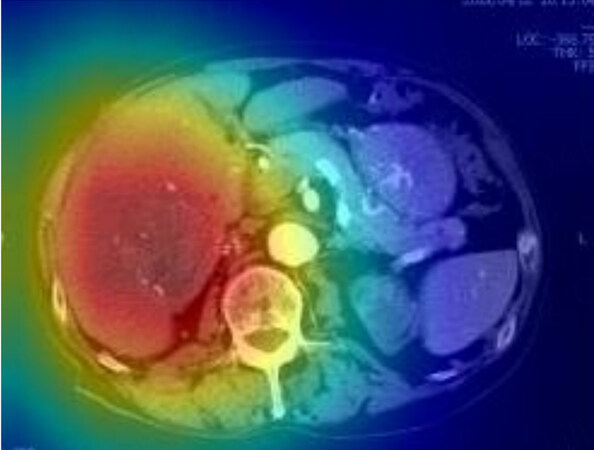

We developed the predictive model for the early recurrence of HCC by deep learning analysis using a dense convolutional network trained with explanatory variables, including clinical data and saliency heat maps [Figure 6]. The data of 543 patients who underwent initial hepatectomy for HCC were randomly divided into training, validation, and test datasets in a ratio of 8:1:1. Preoperative arterial-phase contrast-enhanced CT images and several clinical variables, including sex, age, serum alanine aminotransferase and alpha-fetoprotein, Child-Pugh classification, and platelet count, were used to develop the predictive model for early HCC recurrence. This study defined postoperative early recurrence as intra- or extrahepatic recurrence of HCC within the first 2 postoperative years. In ROC curve analysis, this deep learning model predicted early recurrence (within 1 year after surgery) with AUC values of 0.69 in the test dataset and 0.72 in the validation dataset [Figure 7]. Thus, deep learning-based AI using preoperative CT can be useful for predicting the early recurrence of HCC after surgery.
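A random 8:1:1 division of a patient cohort, as described above, can be sketched as follows. The rounding convention (validation and test sets each get one tenth, rounded down, and the training set gets the remainder), the fixed seed, and the function name are assumptions; the exact procedure used in the study is not specified.

```python
import random


def split_811(ids, seed=0):
    """Randomly split identifiers into train/validation/test at about 8:1:1."""
    ids = list(ids)
    random.Random(seed).shuffle(ids)  # seeded for a reproducible split
    n = len(ids)
    n_val = n // 10
    n_test = n // 10
    n_train = n - n_val - n_test      # remainder goes to the training set
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]
    return train, val, test


# With 543 patients this convention yields 435 / 54 / 54.
train, val, test = split_811(range(543))
```

Splitting at the patient level, rather than at the level of individual CT slices, keeps images from the same patient out of both the training and the evaluation sets.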

Figure 6. Saliency heat map of representative patients using the deep learning model. The red color highlights the region of interest to predict early recurrence.

FUTURE PERSPECTIVES

It is hoped that AI will provide better and more individualized planning for each patient undergoing hepatobiliary surgery. In hepatobiliary surgery, significant progress has been made in preoperative simulation, intraoperative navigation, and prediction of surgical outcomes using AI. However, most studies on AI-based technology in hepatobiliary surgery had a retrospective design. Thus, to acquire reliable results, it is desirable to perform future studies on large patient populations collected in a prospective multicenter trial. Through reliable and robust clinical studies, AI can be a useful tool in clinical practice for improving the safety and efficacy of hepatobiliary surgery.

DECLARATIONS

Authors’ contributions

Made substantial contributions to the conception and design of the study and performed data analysis and interpretation: Shinkawa H, Ishizawa T

Performed data acquisition, as well as providing administrative, technical, and material support: Shinkawa H, Ishizawa T

Availability of data and materials

Not applicable.

Financial support and sponsorship

None.

Conflicts of interest

All authors declared that there are no conflicts of interest.

Ethical approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Copyright

© The Author(s) 2023.

REFERENCES

1. Gumbs AA, Alexander F, Karcz K, et al. White paper: definitions of artificial intelligence and autonomous actions in clinical surgery. Art Int Surg 2022;2:93-100.

2. Mise Y, Hasegawa K, Satou S, et al. How has virtual hepatectomy changed the practice of liver surgery? Ann Surg 2018;268:127-33.

3. Kazami Y, Kaneko J, Keshwani D, et al. Artificial intelligence enhances the accuracy of portal and hepatic vein extraction in computed tomography for virtual hepatectomy. J Hepatobiliary Pancreat Sci 2022;29:359-68.

4. Takamoto T, Ban D, Nara S, et al. Automated three-dimensional liver reconstruction with artificial intelligence for virtual hepatectomy. J Gastrointest Surg 2022;26:2119-27.

5. Chen WF, Ou HY, Lin HY, et al. Development of novel residual-dense-attention (RDA) U-net network architecture for hepatocellular carcinoma segmentation. Diagnostics 2022;12:1916.

6. Koitka S, Gudlin P, Theysohn JM, et al. Fully automated preoperative liver volumetry incorporating the anatomical location of the central hepatic vein. Sci Rep 2022;12:16479.

7. Mojtahed A, Núñez L, Connell J, et al. Repeatability and reproducibility of deep-learning-based liver volume and Couinaud segment volume measurement tool. Abdom Radiol 2022;47:143-51.

8. Lyu F, Ma AJ, Yip TC, Wong GL, Yuen PC. Weakly supervised liver tumor segmentation using Couinaud segment annotation. IEEE Trans Med Imaging 2022;41:1138-49.

9. Tanzi L, Piazzolla P, Porpiglia F, Vezzetti E. Real-time deep learning semantic segmentation during intra-operative surgery for 3D augmented reality assistance. Int J Comput Assist Radiol Surg 2021;16:1435-45.

10. Lin J, Clancy NT, Qi J, et al. Dual-modality endoscopic probe for tissue surface shape reconstruction and hyperspectral imaging enabled by deep neural networks. Med Image Anal 2018;48:162-76.

11. Luo H, Yin D, Zhang S, et al. Augmented reality navigation for liver resection with a stereoscopic laparoscope. Comput Methods Programs Biomed 2020;187:105099.

12. Bertrand LR, Abdallah M, Espinel Y, et al. A case series study of augmented reality in laparoscopic liver resection with a deformable preoperative model. Surg Endosc 2020;34:5642-8.

13. Phutane P, Buc E, Poirot K, et al. Preliminary trial of augmented reality performed on a laparoscopic left hepatectomy. Surg Endosc 2018;32:514-5.

14. Barash Y, Klang E, Lux A, et al. Artificial intelligence for identification of focal lesions in intraoperative liver ultrasonography. Langenbecks Arch Surg 2022;407:3553-60.

15. Mai RY, Zeng J, Meng WD, et al. Artificial neural network model to predict post-hepatectomy early recurrence of hepatocellular carcinoma without macroscopic vascular invasion. BMC Cancer 2021;21:283.

16. Mai RY, Lu HZ, Bai T, et al. Artificial neural network model for preoperative prediction of severe liver failure after hemihepatectomy in patients with hepatocellular carcinoma. Surgery 2020;168:643-52.

17. Merath K, Hyer JM, Mehta R, et al. Use of machine learning for prediction of patient risk of postoperative complications after liver, pancreatic, and colorectal surgery. J Gastrointest Surg 2020;24:1843-51.

18. Chu T, Zhao C, Zhang J, et al. Application of a convolutional neural network for multitask learning to simultaneously predict microvascular invasion and vessels that encapsulate tumor clusters in hepatocellular carcinoma. Ann Surg Oncol 2022;29:6774-83.

19. Li X, Qi Z, Du H, et al. Deep convolutional neural network for preoperative prediction of microvascular invasion and clinical outcomes in patients with HCCs. Eur Radiol 2022;32:771-82.

20. Ishizawa T, Saiura A. Fluorescence imaging for minimally invasive cancer surgery. Surg Oncol Clin N Am 2019;28:45-60.

21. Zhang C, Wang K, Tian J. Adaptive brightness fusion method for intraoperative near-infrared fluorescence and visible images. Biomed Opt Express 2022;13:1243-60.

22. Bari H, Wadhwani S, Dasari BVM. Role of artificial intelligence in hepatobiliary and pancreatic surgery. World J Gastrointest Surg 2021;13:7-18.

23. Christou CD, Tsoulfas G. Role of three-dimensional printing and artificial intelligence in the management of hepatocellular carcinoma: challenges and opportunities. World J Gastrointest Oncol 2022;14:765-93.

24. Saito Y, Shimada M, Morine Y, Yamada S, Sugimoto M. Essential updates 2020/2021: current topics of simulation and navigation in hepatectomy. Ann Gastroenterol Surg 2022;6:190-6.

25. Meiabadi MS, Moradi M, Karamimoghadam M, et al. Modeling the producibility of 3D printing in polylactic acid using artificial neural networks and fused filament fabrication. Polymers 2021;13:3219.

26. Rojek I, Mikołajewski D, Kopowski J, Kotlarz P, Piechowiak M, Dostatni E. Reducing waste in 3D printing using a neural network based on an own elbow exoskeleton. Materials 2021;14:5074.

27. Pugliese R, Regondi S. Artificial intelligence-empowered 3D and 4D printing technologies toward smarter biomedical materials and approaches. Polymers 2022;14:2794.

28. Giannone F, Felli E, Cherkaoui Z, Mascagni P, Pessaux P. Augmented reality and image-guided robotic liver surgery. Cancers 2021;13:6268.

29. Adballah M, Espinel Y, Calvet L, et al. Augmented reality in laparoscopic liver resection evaluated on an ex-vivo animal model with pseudo-tumours. Surg Endosc 2022;36:833-43.

30. Landsman ML, Kwant G, Mook GA, Zijlstra WG. Light-absorbing properties, stability, and spectral stabilization of indocyanine green. J Appl Physiol 1976;40:575-83.

31. Ishizawa T, Tamura S, Masuda K, et al. Intraoperative fluorescent cholangiography using indocyanine green: a biliary road map for safe surgery. J Am Coll Surg 2009;208:e1-4.

32. Ishizawa T, Bandai Y, Kokudo N. Fluorescent cholangiography using indocyanine green for laparoscopic cholecystectomy: an initial experience. Arch Surg 2009;144:381-2.

33. Ishizawa T, Bandai Y, Ijichi M, Kaneko J, Hasegawa K, Kokudo N. Fluorescent cholangiography illuminating the biliary tree during laparoscopic cholecystectomy. Br J Surg 2010;97:1369-77.

34. Kono Y, Ishizawa T, Tani K, et al. Techniques of fluorescence cholangiography during laparoscopic cholecystectomy for better delineation of the bile duct anatomy. Medicine 2015;94:e1005.

35. Terasawa M, Ishizawa T, Mise Y, et al. Applications of fusion-fluorescence imaging using indocyanine green in laparoscopic hepatectomy. Surg Endosc 2017;31:5111-8.

36. Liu Y, Dong L, Ji Y, Xu W. Infrared and visible image fusion through details preservation. Sensors 2019;19:4556.

37. Shen B, Zhang Z, Shi X, et al. Real-time intraoperative glioma diagnosis using fluorescence imaging and deep convolutional neural networks. Eur J Nucl Med Mol Imaging 2021;48:3482-92.

38. Young K, Ma E, Kejriwal S, Nielsen T, Aulakh SS, Birkeland AC. Intraoperative

39. Ochoa M, Rudkouskaya A, Yao R, Yan P, Barroso M, Intes X. High compression deep learning based single-pixel hyperspectral macroscopic fluorescence lifetime imaging in vivo. Biomed Opt Express 2020;11:5401-24.

40. Marsden M, Fukazawa T, Deng YC, et al. FLImBrush: dynamic visualization of intraoperative free-hand fiber-based fluorescence lifetime imaging. Biomed Opt Express 2020;11:5166-80.

Cite This Article

Shinkawa H, Ishizawa T. Artificial intelligence-based technology for enhancing the quality of simulation, navigation, and outcome prediction for hepatectomy. Art Int Surg 2023;3:69-79. http://dx.doi.org/10.20517/ais.2022.37
